Maximal Marginal Relevance to Re-rank Results in Unsupervised Keyphrase Extraction

Photo by Patrick Tomasso on Unsplash

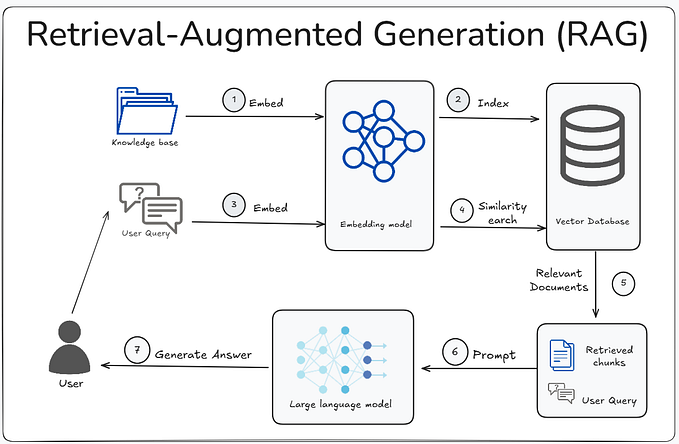

Maximal Marginal Relevance (MMR) was introduced in the paper *The Use of MMR, Diversity-Based Reranking for Reordering Documents and Producing Summaries*. Given documents or phrases that have already been ranked, MMR tries to reduce redundancy in the results while maintaining their relevance to the query.

Let us first walk through an example scenario and then see how MMR helps solve the issue.

Recently I was trying to extract keyphrases from a set of documents that belong to one category. I used several approaches (TextRank, RAKE, and POS tagging, to name a few) to extract keyphrases from the documents. Each approach returns phrases along with a score, and this score is used to rank the phrases for that document.

Let's say your final keyphrases are ranked as Good Product, Great Product, Nice Product, Excellent Product, Easy Install, Nice UI, Light Weight, and so on. The problem with this ranking is that phrases such as Good Product, Nice Product, and Excellent Product are all similar, describe the same property of the product, and are all ranked near the top. If there is room to show only 5 keyphrases, we don't want to fill it with these similar phrases.

You want to use this limited space so that the information the keyphrases convey about the documents is diverse enough. Similar phrases should not dominate the whole space, so that users can see a variety of information about the document.

[Figure: ranking of the extracted keyphrases]

This blog post addresses that problem. There are several ways to solve it; to keep the article simple yet complete, I am going to discuss two approaches:

1. Remove redundant phrases using cosine similarity

Cosine similarity is the naive approach that first came to mind for dealing with terms that have the same meaning. Use word embeddings to compute an embedding for each phrase, then compute the cosine similarity between the embeddings. Set a threshold above which two phrases are considered similar. From each group of similar phrases, keep only the one with the highest score.

One issue with this approach is that you need to set the threshold (0.9 in the code) above which terms are grouped together, and sometimes very close keyphrases have a cosine similarity below the threshold. Each phrase is converted to a vector by averaging the word embeddings of its tokens. Lowering the threshold only shifts the problem rather than solving it. I found it difficult to tune this threshold manually so that it covers all edge cases.
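The thresholding idea described above can be sketched as follows. This is a minimal illustration, not the original code: the 3-dimensional word vectors in `TOY_VECTORS` are made up, and the helper names `phrase_vector`, `cosine`, and `dedupe` are hypothetical. A real pipeline would load pretrained word embeddings instead.

```python
import math

# Toy word vectors, invented for illustration only (assumption).
TOY_VECTORS = {
    "good":    [0.90, 0.10, 0.00],
    "great":   [0.88, 0.12, 0.00],
    "nice":    [0.85, 0.15, 0.05],
    "product": [0.10, 0.90, 0.00],
    "easy":    [0.00, 0.20, 0.90],
    "install": [0.10, 0.10, 0.95],
}

def phrase_vector(phrase):
    """Average the word vectors of the tokens in the phrase."""
    vectors = [TOY_VECTORS[word] for word in phrase.lower().split()]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def dedupe(scored_phrases, threshold=0.9):
    """Walk the phrases from highest to lowest score and keep a phrase
    only if its similarity to every phrase kept so far is below the threshold."""
    kept = []
    for phrase, score in sorted(scored_phrases, key=lambda p: p[1], reverse=True):
        vec = phrase_vector(phrase)
        if all(cosine(vec, phrase_vector(k)) < threshold for k, _ in kept):
            kept.append((phrase, score))
    return kept

ranked = [("Good Product", 0.95), ("Great Product", 0.93),
          ("Nice Product", 0.90), ("Easy Install", 0.80)]
deduped = dedupe(ranked)
print(deduped)
```

With these toy vectors, the three "Product" phrases collapse into the single highest-scored one and "Easy Install" survives. The result is sensitive to `threshold`, which is exactly the tuning difficulty described above.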

2. Re-rank the keyphrases using MMR

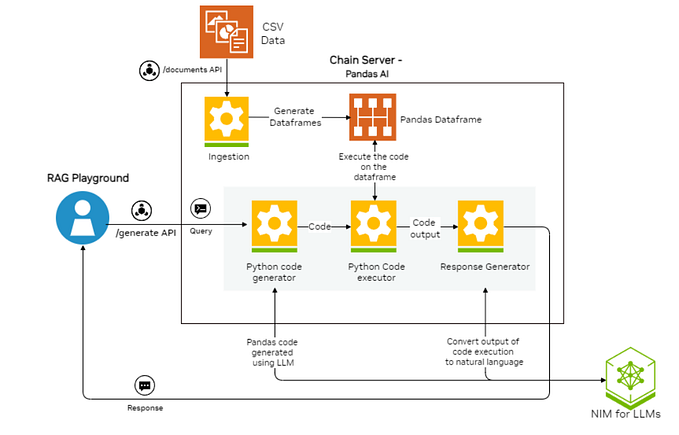

The idea behind MMR is to reduce redundancy and increase diversity in the results; it is also used in text summarization. MMR selects phrases for the final keyphrase list according to a combined criterion of query relevance and novelty of information.

The latter measures the degree of dissimilarity between the document being considered and previously selected ones already in the ranked list. [1]

MMR ranking provides a useful way to present non-redundant information to the user. It considers both the similarity of a keyphrase to the document and its similarity to the phrases that have already been selected.

MMR is defined as:

$$
\mathrm{MMR} = \arg\max_{D_i \in R \setminus S} \left[ \lambda \, \mathrm{Sim}_1(D_i, Q) - (1 - \lambda) \max_{D_j \in S} \mathrm{Sim}_2(D_i, D_j) \right]
$$

where Q = query (description of the document category)

R = set of documents related to query Q

S = subset of documents in R already selected

R \ S = set of unselected documents in R

λ = constant in the range [0, 1] that controls the diversification of results

In the keyphrase setting, the "documents" D_i are the candidate keyphrases. In the implementation of MMR used here, cosine similarity serves as both Sim_1 and Sim_2. Any other similarity measure can be substituted and the function modified accordingly.
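As a rough sketch of what such an implementation can look like, the greedy loop below uses cosine similarity for both Sim_1 and Sim_2. It is not the original code from this post: the function name `mmr`, its parameters, the helper `unit`, and the 2-dimensional toy vectors are all assumptions made for illustration. In practice the vectors would be embeddings of the candidate phrases and of the category description.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def mmr(query_vec, candidates, lam=0.5, top_n=5):
    """Greedy MMR. `candidates` maps phrase -> vector.
    Returns up to `top_n` phrases in the order they were selected."""
    selected = []
    remaining = list(candidates)

    def score(phrase):
        # Sim_1: relevance of the candidate to the query.
        relevance = cosine(candidates[phrase], query_vec)
        # Sim_2: highest similarity to any phrase already selected
        # (0.0 while nothing has been selected yet).
        redundancy = max(
            (cosine(candidates[phrase], candidates[s]) for s in selected),
            default=0.0,
        )
        return lam * relevance - (1 - lam) * redundancy

    while remaining and len(selected) < top_n:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected

def unit(degrees):
    """Hypothetical helper: 2-D unit vector at the given angle."""
    r = math.radians(degrees)
    return (math.cos(r), math.sin(r))

# Toy data (assumption): three near-identical phrases and one distinct phrase.
candidates = {
    "Good Product": unit(10),
    "Great Product": unit(8),
    "Nice Product": unit(6),
    "Easy Install": unit(75),
}
query = (1.0, 0.6)

print(mmr(query, candidates, lam=0.5, top_n=2))
```

On this toy data, "Easy Install" is selected right after "Good Product", ahead of the near-duplicate "Product" phrases. With `lam=1.0` the redundancy term vanishes and the output is the plain relevance ranking.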

Setting λ to 0.5 gives a balanced mix of diversity and relevance in the result set. The best value of λ depends on your use case and your dataset.
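To make the role of λ concrete, it helps to look at the two extremes of the MMR criterion, written with the symbols Q, R, S, Sim_1, and Sim_2 defined earlier. This is only a restatement of the formula at its endpoints, not an additional result:

$$
\lambda = 1:\quad \arg\max_{D_i \in R \setminus S} \mathrm{Sim}_1(D_i, Q)
\qquad\qquad
\lambda = 0:\quad \arg\min_{D_i \in R \setminus S} \, \max_{D_j \in S} \mathrm{Sim}_2(D_i, D_j)
$$

At λ = 1 the selection reduces to the plain relevance ranking, and at λ = 0 it ignores the query and picks whatever is least similar to the phrases already chosen. Values in between trade one off against the other.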

MMR addresses the issue by ranking similar phrases far apart. Selecting the top N keyphrases is then no longer a problem, because similar terms are no longer clustered together at the top and do not all appear in the final result.

Please let me know if you liked the post or have any suggestions or concerns, and feel free to reach out to me on LinkedIn.

References: