Abstract 摘要

Nowadays, session-based recommendation plays an increasingly important role in the e-commerce field, which predicts the item that a user may click next time based on the sequence of user clicks. However, in real-world scenarios, due to various factors, there is noise in the process of user click behavior. For example, an unexpected click may not be the user's true intention and thus affect the user's behavior prediction. The current denoising methods have the following challenges: denoising directly from a single user's click sequence is not sufficient, the impact of unexpected clicks is not fully considered, and the additional information of other users is not fully utilized. To address these challenges, a new denoising dual sparse graph attention model for session-based recommendation abbreviated as DDSG, which not only considers the information in the current session, but also utilizes the information outside the current session. In the current session, this paper uses position encoding and gated neural networks that are biased towards frequency information to obtain the initial embedding, and uses the self-attention mechanism to model the target representation. In terms of denoising, we first iteratively denoise the representation obtained in the session, and perform sparse self-attention denoising on the session representation based on the target representation. Outside the current session, we take other sessions with similar interests to the current session to enhance the current session. Finally, the user's next click item is predicted by combining internal and extra information of the session. Experiments on three e-commerce datasets demonstrate our model exceeded the optimal SOTA model by 107%, achieving the highest performance and verifying the effectiveness of our model.

如今,基于会话的推荐在电子商务领域扮演着越来越重要的角色,它根据用户点击序列预测用户下一次可能点击的物品。然而,在现实场景中,由于各种因素的影响,用户点击行为过程中存在噪声。例如,意外的点击可能并非用户的真实意图,从而影响用户行为预测。当前的降噪方法存在以下挑战:直接从单个用户的点击序列中进行降噪是不够的,未预期点击的影响没有得到充分考虑,其他用户的附加信息没有得到充分利用。为了解决这些挑战,本文提出了一种新的基于会话的推荐降噪双稀疏图注意力模型,简称 DDSG。该模型不仅考虑了当前会话中的信息,还利用了当前会话之外的信息。在当前会话中,本文使用位置编码和偏向于频率信息的门控神经网络来获取初始嵌入,并使用自注意力机制来建模目标表示。 在去噪方面,我们首先对会话中获得的表示进行迭代去噪,并根据目标表示在会话表示上执行稀疏自注意力去噪。在当前会话之外,我们选取与当前会话具有相似兴趣的其他会话来增强当前会话。最后,通过结合会话的内部和外部信息来预测用户的下一个点击项。在三个电子商务数据集上的实验表明,我们的模型超过了最优的 SOTA 模型,提高了 107%,实现了最高的性能,并验证了我们的模型的有效性。

Similar content being viewed by others

其他人正在查看的内容

Explore related subjects

探索相关主题

Discover the latest articles and news from researchers in related subjects, suggested using machine learning.通过机器学习推荐,发现相关主题的最新文章和新闻

Avoid common mistakes on your manuscript.

1 Introduction 1 引言

Today, recommendation systems have been widely used in the field of e-commerce and social networks. Recommendation systems analyze user behavior and preferences to provide the users with personalized recommendations, thus changing people's consumption behavior and shopping methods. The users are more inclined to make purchase decisions based on recommendation results rather than searching and screening products from scratch. Recommendation systems use the users' social relations and interaction records to provide them with relevant recommendations, which helps to strengthen social networks and enhance interaction and trust between the users. In today's era of booming digitalization, online shopping has become an indispensable part of people's daily lives. However, in this highly personalized consumer experience, a significant phenomenon is that a large number of the users freely shuttle between major e-commerce platforms without logging in, leisurely browsing a wide range of products, and clicking from time to time to get more details or add to the shopping cart. Although this behavior pattern brings convenience and privacy protection to the users, it also brings a challenge to e-commerce platforms: how to effectively capture and utilize the browsing behavior of these anonymous users to provide more accurate and personalized product recommendations.

今天,推荐系统在电子商务和社交网络领域得到了广泛应用。推荐系统通过分析用户行为和偏好,为用户提供个性化推荐,从而改变人们的消费行为和购物方式。用户更倾向于根据推荐结果做出购买决策,而不是从头开始搜索和筛选产品。推荐系统利用用户的社交关系和互动记录提供相关推荐,有助于加强社交网络,增强用户之间的互动和信任。在数字化蓬勃发展的今天,在线购物已成为人们日常生活不可或缺的一部分。然而,在这种高度个性化的消费体验中,一个显著的现象是,大量用户在未登录的情况下自由穿梭于各大电商平台之间,悠闲地浏览各种产品,不时地点击以获取更多详情或添加到购物车。 尽管这种行为模式给用户带来了便利和隐私保护,但也给电商平台带来了挑战:如何有效地捕捉和利用这些匿名用户的浏览行为,以提供更准确和个性化的产品推荐。

In order to meet this challenge, session-based recommendation (SBR) models came into being and gradually became a powerful tool to solve the anonymous user's recommendation problem [1]. Traditional recommendation systems often rely on historical data accumulated by users over a long period of time, including purchase records, browsing history, search keywords, etc., to build user portraits and predict their interest preferences. The SBR model takes a different approach, which focuses on capturing and analyzing the instant, anonymous, and short-term data streams generated by users during a single browsing session. Although these data do not contain user identity information, they can reflect the user's interest changes in products and potential purchase intentions in a short period of time. The SBR model uses complex algorithmic mechanisms such as recurrent neural networks (RNN), long short-term memory networks (LSTM) [2], or graph neural networks (GNN) to understand and model the sequential patterns [3]. It can identify the interest transfer paths shown by users when they click on products continuously, and predict the next products that the users may be interested in the current session. This instant and dynamic recommendation method not only improves the accuracy and timeliness of recommendations, but also greatly enhances the user experience, allowing users to enjoy personalized shopping suggestions even when they are not logged in.

为了应对这一挑战,基于会话的推荐(SBR)模型应运而生,并逐渐成为解决匿名用户推荐问题的一种强大工具[1]。传统的推荐系统通常依赖于用户长期积累的历史数据,包括购买记录、浏览历史、搜索关键词等,以构建用户画像并预测他们的兴趣偏好。SBR 模型采取了一种不同的方法,它专注于捕捉和分析用户在单个浏览会话期间产生的即时、匿名和短期数据流。尽管这些数据不包含用户身份信息,但它们可以在短时间内反映用户对产品的兴趣变化和潜在的购买意图。SBR 模型使用诸如循环神经网络(RNN)、长短期记忆网络(LSTM)[2]或图神经网络(GNN)等复杂的算法机制来理解和建模序列模式[3]。 它可以识别用户在连续点击产品时展现的兴趣转移路径,并预测用户在当前会话中可能感兴趣的下个产品。这种即时且动态的推荐方法不仅提高了推荐的准确性和时效性,而且极大地提升了用户体验,即使在用户未登录的情况下也能享受个性化的购物建议。

The core advantage of the framework based on recurrent neural networks is that it deeply models the temporal characteristics of sequence data, thereby effectively revealing the high-order dependencies and mutual influences between items in the sequence. However, this framework encounters challenges when processing session data, because although session data is sequential, its uniqueness lies in the complex transformation patterns between items. This nonlinear and variable transformation relation is crucial to understanding the overall structure of the session and user intentions, and traditional RNN models are unable to capture these subtle transformations. With the rise and widespread application of graph neural network technology, the researchers have pioneered the reconstruction of session data into the form of session graphs. This innovative move cleverly retains the rich pairwise transition information between items in the session, providing a new perspective for understanding user behavior paths. Although GNN may be slightly inferior to RNN in directly modeling long-distance dependencies, it has shown an incomparable advantage in capturing the immediate and accurate transition between the items, which is crucial to improving the accuracy of session analysis and recommendation.

该基于循环神经网络框架的核心优势在于它深度模拟了序列数据的时序特征,从而有效地揭示了序列中项目之间的高阶依赖和相互影响。然而,当处理会话数据时,这个框架会遇到挑战,因为尽管会话数据是序列性的,但其独特性在于项目之间的复杂转换模式。这种非线性且可变的转换关系对于理解会话的整体结构和用户意图至关重要,而传统的 RNN 模型无法捕捉这些细微的转换。随着图神经网络技术的兴起和广泛应用,研究人员开创了将会话数据重构为会话图形式的方法。这一创新举措巧妙地保留了会话中项目之间的丰富成对转换信息,为理解用户行为路径提供了新的视角。 尽管 GNN 在直接建模长距离依赖方面可能略逊于 RNN,但它捕捉项目之间即时准确过渡的优势无可比拟,这对于提高会话分析和推荐的准确性至关重要。

In order to make up for the shortcomings of GNN in global dependency modeling and further enhance its modeling ability, researchers have turned their attention to the attention mechanism, a technology that has proven its effectiveness in solving long-term dependency problems. By cleverly integrating the attention mechanism into the graph neural network framework to form the graph attention network (GAT) [4], the dynamic evaluation and weighting of nodes in the session graph is realized, which not only retains the advantage of GNN in capturing local transition relations, but also significantly enhances the ability to capture the overall structure of the session and interest patterns of the users. GAT-based models can therefore more accurately identify and respond to users’ immediate needs and potential interests in complex session data, promoting a new round of leaps in session analysis and personalized recommendation technology.

为了弥补 GNN 在全局依赖建模方面的不足并进一步增强其建模能力,研究人员将注意力转向了注意力机制,这一技术在解决长期依赖问题方面已证明其有效性。通过巧妙地将注意力机制整合到图神经网络框架中形成图注意力网络(GAT)[4],实现了会话图中节点的动态评估和加权,这不仅保留了 GNN 在捕捉局部转换关系方面的优势,还显著增强了捕捉会话整体结构和用户兴趣模式的能力。因此,基于 GAT 的模型可以更准确地识别和响应用户在复杂会话数据中的即时需求和潜在兴趣,推动会话分析和个性化推荐技术的新一轮飞跃。

When users interact with the platform, they may generate some behaviors that are inconsistent with their actual needs. For example, users may accidentally click on some content that they are not interested in, or generate abnormal behavior data in a specific situation, which will bring noise to the click sequence, which may confuse the recommendation model. Most of the existing methods denoise the weights of items in the sequence. These methods are not only too simple and cannot be directly applied to all denoising scenarios, but also do not consider the interest migration of users in the click sequence.

当用户与平台互动时,他们可能会产生一些与其实际需求不一致的行为。例如,用户可能会不小心点击一些他们不感兴趣的内容,或者在特定情况下生成异常行为数据,这会给点击序列带来噪声,可能会混淆推荐模型。大多数现有方法对序列中物品的权重进行降噪。这些方法不仅过于简单,不能直接应用于所有降噪场景,而且也没有考虑点击序列中用户的兴趣迁移。

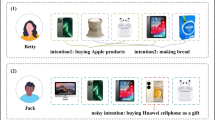

For example, in the following click sequence, the user initially clicked on several e-books related to programming and data science, indicating that the user is interested in programming and technical content. Subsequently, the user clicked on headphones and mobile phones, indicating that the user may have a temporary interest in electronic products. Next, the user returned to clicking on technical e-books. Finally, the user clicked on furniture, which may be due to the need for daily necessities. From this sequence, it can be seen that the user's interest has shifted between technical books and electronic products and furniture. The user's click behavior does not always follow a single interest trajectory, so if the noise is simply removed based on the weight of the item, it is likely to cause the recommendation system to ignore the user's actual interest in a specific time period. For example, if the system considers the click of electronic products to be noise and removes it, it may ignore the user's temporary demand for electronic products, thereby affecting the comprehensiveness of the recommendation. This example shows that the user's preference is not static and may shift over time and context. In order to better denoise, we need to analyse the transfer trajectory of user interests and identify which clicks are the natural result of interest transfer rather than noise. Based on this, the recommendation system can combine the dynamics of user interest transfer for denoising to ensure that the actual changes in user needs are not ignored simply based on the weight of the item. Therefore, denoising is not just about simply filtering out items that seem “irrelevant”, but about understanding the changing trend of user interests in the sequence. When we delve into models for session-based recommendation, we inevitably encounter a series of challenges that have not yet been fully overcome. The first challenge is that models often fail to fully consider the mixed noisy items in session data when building recommendation systems. These noisy items may come from involuntary operations in user behavior, such as inadvertent clicks on advertisements or unconscious touches on the screen, which do not truly reflect the user's interest preferences, but may sneak into the learning process of the model and cause adverse interference to the practicality of the results. Furthermore, the design focus of most current GAT-based recommendation system models is limited to data association and feature extraction within a single session, but to a certain extent ignores the potential value information and patterns between sessions. In fact, the behavior trajectories of the users in different sessions often contain rich historical preferences, long-term trends and potential needs. If the above information can be effectively integrated into the model, it will undoubtedly significantly improve the personalization and accuracy of recommendations. Therefore, how to break the barriers between the sessions and cleverly integrate information between sessions has become one of the key issues in GAT model.

例如,在以下点击序列中,用户最初点击了几个与编程和数据分析相关的电子书,表明用户对编程和技术内容感兴趣。随后,用户点击了耳机和手机,表明用户可能对电子产品有临时兴趣。接下来,用户又回到了点击技术电子书。最后,用户点击了家具,这可能是由于对日常必需品的需要。从这个序列中可以看出,用户的兴趣在技术书籍、电子产品和家具之间有所转移。用户的点击行为并不总是遵循单一的兴趣轨迹,因此如果仅仅根据物品的权重去除噪声,很可能会使推荐系统忽略用户在特定时间段内的实际兴趣。例如,如果系统将电子产品的点击视为噪声并去除它,可能会忽略用户对电子产品的临时需求,从而影响推荐的全面性。 此示例表明用户的偏好并非静态,可能会随时间和情境变化。为了更好地降噪,我们需要分析用户兴趣的转移轨迹,并识别哪些点击是兴趣转移的自然结果而非噪声。基于此,推荐系统可以结合用户兴趣转移的动态进行降噪,以确保不会仅仅根据物品的权重而忽略用户需求的实际变化。因此,降噪不仅仅是简单地过滤掉看似“无关”的物品,而是理解用户兴趣序列中变化的趋势。当我们深入研究基于会话的推荐模型时,不可避免地会遇到一系列尚未完全克服的挑战。第一个挑战是,在构建推荐系统时,模型往往未能充分考虑会话数据中的混合噪声项。 这些噪声项可能来自用户行为中的非自愿操作,例如不小心点击广告或无意识地触摸屏幕,这些并不真正反映用户的兴趣偏好,但可能会悄悄进入模型的训练过程,对结果的实用性造成不利干扰。此外,大多数基于 GAT 的推荐系统模型的设计重点局限于单次会话内的数据关联和特征提取,但在一定程度上忽略了会话之间的潜在价值信息和模式。实际上,用户在不同会话中的行为轨迹往往包含丰富的历史偏好、长期趋势和潜在需求。如果上述信息能够有效地整合到模型中,无疑将显著提高推荐的个性化和准确性。因此,如何打破会话之间的障碍,巧妙地整合会话之间的信息,已成为 GAT 模型的关键问题之一。

Faced with this challenge, we have ingeniously designed a denoising dual sparse graph attention model for session-based recommendation, which deeply integrates the interaction modes within and between the sessions, and innovatively proposes to use the sparse graph attention mechanism for dual-layer denoising, especially optimized for capturing user intent. The core of this model is to use a dual-layer denoising mechanism of two perspectives and a sparse graph attention network, which can not only effectively capture the interest migration within the session, but also accurately grasp the potential correlation between the sessions, and effectively enhance the session representation, thereby making accurate session recommendations. The contributions of this paper are as follows:

面对这一挑战,我们巧妙地设计了一种用于基于会话推荐的降噪双稀疏图注意力模型,该模型深度整合了会话内部及会话之间的交互模式,并创新性地提出使用稀疏图注意力机制进行双层降噪,特别优化以捕捉用户意图。该模型的核心是采用双视角和稀疏图注意力网络的双层降噪机制,不仅能有效捕捉会话内的兴趣迁移,还能准确把握会话之间的潜在相关性,并有效增强会话表示,从而实现准确的会话推荐。本文的贡献如下:

-

Denoising dual sparse graph attention: This model enhances the sparse graph attention network by utilizing a self-attention mechanism, allowing for an in-depth analysis of item dependencies during the denoising process. It emphasizes the consistency and evolving patterns within sessions, thereby significantly boosting the model's capacity to represent session data effectively.

去噪双稀疏图注意力:该模型通过利用自注意力机制增强了稀疏图注意力网络,允许在去噪过程中深入分析项目依赖关系。它强调了会话中的一致性和演变模式,从而显著提高了模型有效表示会话数据的能力。 -

Dual-graph mechanism and session enhancement component: The introduction of the session relation graph provides a powerful tool for modeling complex dependencies between sessions, capturing the rich features of the session at two levels. The addition of the session enhancement component further improves the ability to understand and predict the current session intent, and together promotes a significant improvement in recommendation accuracy.

双图机制和会话增强组件:会话关系图的出现为建模会话之间的复杂依赖关系提供了一个强大的工具,捕捉会话在两个层面的丰富特征。会话增强组件的添加进一步提高了理解和预测当前会话意图的能力,共同促进了推荐精度的显著提升。 -

We carried out a comprehensive series of experiments across three different datasets, and the findings reveal that this model outperforms the current state-of-the-art (SOTA) model by a considerable margin.

我们在三个不同的数据集上进行了全面的一系列实验,结果表明,该模型在性能上明显优于当前最先进(SOTA)模型。

2 Related works 2 相关工作

In this section, we will discuss traditional methods, graph attention-based methods, graph neural network-based methods, and denoising methods in session-based recommendation.

在本节中,我们将讨论基于会话推荐的经典方法、基于图注意力方法、基于图神经网络方法和去噪方法。

2.1 Traditional methods 2.1 传统方法

Recognizing the significance of sequentiality in session data, researchers have employed Markov Chains (MC) [5] to tackle sequential modeling in session-based recommendations (SBR). They transformed the current session into a Markov chain, using past interactions to forecast the user's next actions. For instance, Rendle introduced FPMC [6], which utilized a hybrid method merging matrix factorization with Markov chain analysis to identify user intent for recommendations. The FPMC approach adjusted to SBR by excluding the latent user representation, which is typically unavailable for session-based recommendations. While MC-based methods concentrated on modeling the transitions between two consecutive items, this approach represented these transitions as a graph, effectively capturing the natural order of item transitions in SBR. Later adaptations and extensions of this technique, including the integration of temporal and spatial data, enhanced the performance of SBR models. There has been a notable increase in the use of recurrent neural network (RNN) techniques for modeling sequential patterns in SBR tasks. One of the groundbreaking approaches was introduced by Hidasi with GRU4REC [7], which utilized a multi-layer gated recurrent unit (GRU) architecture to analyze sequences of item interactions. Following this, Tan [8] enhanced the GRU4REC framework by integrating data augmentation methods. Li [9] developed NARM, which added an attention mechanism, improving the representation of item transition patterns in SBR. ISLF [10] took into account the evolving interests of users by combining variational autoencoders with RNNs to capture sequential behavior features effectively. MCPRN [11] presented a hybrid channel model to address the diverse purposes within individual sessions in SBR. However, RNN-based techniques primarily focused on representing the sequential progression between consecutive items to infer user preferences based on time series [12]. As a result, they face difficulties in effectively capturing complex transition patterns, especially those involving non-consecutive items.

认识到会话数据中序列性的重要性,研究人员已经采用马尔可夫链(MC)[5]来解决基于会话推荐(SBR)中的序列建模问题。他们将当前会话转化为马尔可夫链,利用过去的交互来预测用户的下一步动作。例如,Rendle 引入了 FPMC [6],该算法结合了矩阵分解和马尔可夫链分析,以识别推荐中的用户意图。FPMC 方法通过排除通常在基于会话推荐中不可用的潜在用户表示来适应 SBR。虽然基于 MC 的方法专注于建模两个连续项目之间的转换,但这种方法将这些转换表示为图,有效地捕捉了 SBR 中项目转换的自然顺序。后来对这种技术的改进和扩展,包括整合时间和空间数据,提高了 SBR 模型的表现。在 SBR 任务中,循环神经网络(RNN)技术在建模序列模式方面的使用显著增加。 Hidasi 提出了一个开创性的方法,即 GRU4REC [7],该方法利用多层门控循环单元(GRU)架构来分析物品交互序列。随后,Tan [8] 通过集成数据增强方法增强了 GRU4REC 框架。Li [9] 开发了 NARM,其中添加了注意力机制,改善了 SBR 中物品转换模式的表示。ISLF [10] 通过结合变分自编码器和 RNN 来捕获序列行为特征,考虑了用户兴趣的演变。MCPRN [11] 提出了一种混合通道模型,以解决 SBR 中单个会话内的多样化目的。然而,基于 RNN 的技术主要关注表示连续物品之间的序列进展,以根据时间序列推断用户偏好 [12]。因此,它们在有效捕捉复杂转换模式方面面临困难,尤其是涉及非连续物品的模式。

2.2 Graph attention-based methods

2.2 基于图注意力方法

Liu [13] introduced STAMP, which utilizes attention mechanisms to capture users’ current interests, moving away from traditional recurrent neural networks. It emphasized the significance of the last click through their attention-based approaches. These techniques illustrated the critical role of understanding user behavior in session-based recommendations by integrating attention mechanisms to gather pertinent information. Inspired by the Transformer [14] architecture, SASRec [15] adopted a stacking method to assess item relevance in session-based contexts. Furthermore, CoSAN [16] utilized neighborhood data to form collaborative representations, enhancing dynamic projective representations. To effectively address users’ interests, various models have been introduced, including Ying’s two-layer model [17] and He’s Locker model [18]. These models leverage self-attention mechanisms to effectively capture long-term preferences, while short-term preferences are addressed using a local encoder. The integration of attention mechanisms within session-based recommendation (SBR) systems has significantly enhanced recommendation accuracy and improved the capacity to model the dynamic evolution of user preferences, paving the way for more advanced SBR approaches.

刘[13]介绍了 STAMP,该模型利用注意力机制来捕捉用户的当前兴趣,摆脱了传统的循环神经网络。它强调了通过基于注意力的方法关注最后点击的重要性。这些技术通过整合注意力机制来收集相关信息,展示了在基于会话的推荐中理解用户行为的关键作用。受 Transformer[14]架构的启发,SASRec[15]采用堆叠方法来评估会话上下文中的项目相关性。此外,CoSAN[16]利用邻域数据形成协同表示,增强了动态射影表示。为了有效应对用户的兴趣,已经引入了各种模型,包括 Ying 的双层模型[17]和 He 的 Locker 模型[18]。这些模型利用自注意力机制有效地捕捉长期偏好,而短期偏好则通过局部编码器来解决。 将注意力机制整合到基于会话的推荐(SBR)系统中,显著提高了推荐准确性,并增强了建模用户偏好动态演化的能力,为更高级的 SBR 方法铺平了道路。

2.3 Graph neural network-based methods

2.3 基于图神经网络的算法

Numerous studies have employed GNN-based models to derive item representations for session-based recommendations (SBR) from graphs generated by the current session. Wu presented the SRGNN [19] framework, which utilizes gated graph neural networks (GGNN) to extract item information from session graph. This model creates compressed session embeddings by integrating individual item embeddings through an attention mechanism, where the attention weight is influenced by the relevance of each item to its predecessor. Building on the success of SRGNN, various adaptations have emerged for SBR, including GCSAN [20]. Drawing inspiration from SRGNN, Yu introduced TAGNN [21], which implements target-aware attention to highlight different facets of user behavior based on the target item, enabling adaptive activation of diverse user interests. Qiu's FGNN [22] employs a multi-head attention mechanism to aggregate neighbor embeddings and combines learned embeddings iteratively according to their relevance to the session, ultimately forming the session representation. GCE-GNN [23] constructs session representations by combining insights from both the session-level and global perspectives. It employs a session-aware attention mechanism to enhance global graph node learning, achieved through the recursive aggregation of neighboring node embeddings within the global graph. Additionally, to capture item correlations within context windows of varying sizes for each session. Wang’s SHARE [24] concurrently models multiple item correlations using sliding windows alongside a hypergraph structure to encapsulate contextual information, while taking into account different-sized context windows to better understand sessions and extract user intent. Li introduced HIDE [25], a framework that analyzes transitions from multiple perspectives and constructs various hypergraphs. This approach captures high-order interest transitions through hypergraphs and employs intent-aware attention convolution on each session hypergraph, enhancing the separation of distinct user intentions via two specific tasks. Additionally, MGIR [26] improved session representation by offering a novel method grounded in multi-aspect global item relation learning, which encodes different relationships through various layers and creates enriched session embeddings by integrating both positive and negative relationships. Furthermore, Li [27] differentiated between overt and subtle associations among items, presenting an adaptive GNN that independently extracts features. This significantly boosts performance by allowing the graph structure to evolve dynamically during model training, adapting to shifting user preferences. Within GNN-based SBR models, the multi-graph approach proved more advantageous than the single-graph model, encouraging the adoption of multi-graphs for session modeling in the proposed framework.

众多研究已采用基于 GNN 的模型从当前会话生成的图中推导出会话推荐(SBR)的项目表示。Wu 提出了 SRGNN [19]框架,该框架利用门控图神经网络(GGNN)从会话图中提取项目信息。该模型通过整合单个项目嵌入并通过注意力机制创建压缩的会话嵌入,其中注意力权重受每个项目与其前驱的相关性影响。在 SRGNN 成功的基础上,SBR 出现了各种改进,包括 GCSAN [20]。受到 SRGNN 的启发,Yu 引入了 TAGNN [21],该模型实现目标感知注意力,根据目标项目突出用户行为的不同方面,从而实现不同用户兴趣的适应性激活。Qiu 的 FGNN [22]采用多头注意力机制来聚合邻居嵌入,并根据它们与会议的相关性迭代地结合学习到的嵌入,最终形成会话表示。GCE-GNN [23]通过结合会话级和全局视角的见解来构建会话表示。 它采用会话感知的注意力机制来增强全局图节点学习,通过在全局图中递归聚合相邻节点嵌入来实现。此外,为了捕捉每个会话中不同大小上下文窗口内的项目相关性。王在 SHARE [24]中同时使用滑动窗口和超图结构来封装上下文信息,同时考虑不同大小的上下文窗口,以更好地理解会话和提取用户意图。李引入了 HIDE [25],这是一个从多个角度分析转换并构建各种超图的框架。这种方法通过超图捕捉高阶兴趣转换,并在每个会话超图上使用意图感知的注意力卷积,通过两个特定任务增强不同用户意图的分离。 此外,MGIR [26] 通过提供一种基于多方面全局物品关系学习的新方法来改进会话表示,通过不同层编码不同的关系,并通过整合正负关系创建丰富的会话嵌入。此外,Li [27] 区分了物品之间的显性和微妙关联,提出了一种自适应 GNN,该 GNN 独立提取特征。这通过允许图结构在模型训练期间动态演变,适应不断变化的用户偏好,显著提升了性能。在基于 GNN 的 SBR 模型中,多图方法比单图模型更有优势,鼓励在所提出的框架中采用多图进行会话建模。

2.4 Denoising methods 2.4 去噪方法

Graph neural network (GNN) approaches face challenges in effectively capturing sequential information within a session, particularly regarding repeated nodes and the initial nodes of directed graphs. Zhang introduced SEDGN [28], which improves the modeling of sequential information by incorporating a denoising module to address the inherent noise. This model features two denoising modules designed to extract the user's typical preference from the graph data, thereby reducing the noise's effect within the session. While some researchers attempted to minimize noisy signals by assigning lower weights to less significant items, this strategy often still resulted in substantial noise accumulation, impeding the ability to accurately reflect user intent. Dai developed DGNN [29], which incorporates adaptive thresholds, enabling each node to manage varying levels of noise, thus enhancing the model's denoising capabilities. Specifically, this method establishes an adaptive threshold based on graph neural networks to withstand different noise levels. Hua proposed IDGNN [30], which sought to overcome the shortcomings of previous methods by capturing hidden user intent and filtering out noisy signals in session sequences. This approach considers noise impacts and introduces a relative ranking method along with a denoising threshold to dynamically filter out irrelevant user intent. Nonetheless, these methods often overlooked the evolving nature of user intent, leading to inaccurate denoising of the interests of users whose preferences had shifted.

图神经网络(GNN)方法在有效捕捉会话中的顺序信息方面面临挑战,尤其是在处理有向图的重复节点和初始节点时。张引入了 SE-DGN [28],通过引入去噪模块来提高顺序信息的建模,从而解决固有的噪声问题。该模型具有两个去噪模块,旨在从图数据中提取用户的典型偏好,从而减少会话中噪声的影响。虽然一些研究人员试图通过给不太重要的项目分配较低的权重来最小化噪声信号,但这种策略通常仍然会导致大量的噪声积累,阻碍了准确反映用户意图的能力。戴开发了 DGNN [29],该模型引入了自适应阈值,使每个节点能够管理不同水平的噪声,从而增强了模型的去噪能力。具体来说,这种方法基于图神经网络建立了一个自适应阈值,以承受不同的噪声水平。 华提出了 IDGNN [30],该方法试图通过捕捉隐藏的用户意图并过滤会话序列中的噪声信号来克服先前方法的不足。这种方法考虑了噪声的影响,并引入了一种相对排名方法和去噪阈值,以动态地过滤掉不相关的用户意图。尽管如此,这些方法往往忽略了用户意图的演变性质,导致对偏好发生变化的用户兴趣的去噪不准确。

3 Preliminaries 3 预备知识

3.1 Problem statement 3.1 问题陈述

The session set is denoted as , where m represents the total length of the session. All the item sets in the dataset are denoted as , where n denotes the total number of items. Every session is made by the interaction in an anonymous user’s click. In this context, we define a click sequence as , where represents the length of the click sequence. Therefore, the goal of our work is to predict the item clicked in the time based on the previous clicks.

会话集表示为 ,其中 m 代表会话的总长度。数据集中的所有项目集表示为 ,其中 n 表示项目总数。每个会话由匿名用户的点击交互构成。在这种情况下,我们定义点击序列为 ,其中 表示点击序列的长度。因此,我们工作的目标是根据之前的 次点击预测第 次点击的项目。

3.2 Sparse attention 3.2 稀疏注意力

In the traditional attention mechanism framework, the softmax function is the core component, which is used to assign a normalized probability weight to each item in the input sequence. Since the returned values are all positive, such a non-zero probability will assign weights to irrelevant data, and this process often does not consider the direct correlation between each element and the user’s actual intention or task goal, thus affecting the recommendation ability. As the attention levels continue to stack, even non-critical information that is initially assigned a small weight may gradually amplify due to the cumulative effect of weights, eventually causing the model to deviate when generating recommendations or making predictions.

在传统的注意力机制框架中,softmax 函数是核心组件,用于将归一化的概率权重分配给输入序列中的每个项目。由于返回的值都是正数,这种非零概率会将权重分配给无关数据,并且这个过程通常不考虑每个元素与用户的实际意图或任务目标之间的直接相关性,从而影响推荐能力。随着注意力级别的持续叠加,最初分配了较小权重的非关键信息也可能由于权重的累积效应而逐渐放大,最终导致模型在生成推荐或进行预测时发生偏差。

To overcome this limitation, the sparsemax algorithm was introduced, which innovatively assigns zero weights to items that are not directly related to the task, thereby achieving sparse attention distribution. This mechanism effectively reduces the interference of noise information and the model focus more on key message that makes a substantial contribution to the task. The sparsemax is as follows:

为了克服这一限制,引入了 sparsemax 算法,该算法创新性地将零权重分配给与任务无直接关系的项目,从而实现稀疏注意力分布。这种机制有效地减少了噪声信息的干扰,使模型更专注于对任务有实质性贡献的关键信息。sparsemax 如下:

Based on sparsemax, Correia [31] further proposed a sparse attention mechanism based on entmax in 2019. This mechanism not only inherits the sparse advantage of sparsemax, but also introduces an adjustable entropy parameter, which enables the model to adaptively adjust the sparsity according to the specific characteristics of the data, thereby further improving the accuracy and reliability of the prediction while maintaining the validity of the information. The entmax is as follows:

基于 sparsemax,Correia [31]于 2019 年进一步提出了一种基于 entmax 的稀疏注意力机制。该机制不仅继承了 sparsemax 的稀疏优势,还引入了一个可调整的熵参数,这使得模型能够根据数据的特定特征自适应地调整稀疏性,从而进一步提高预测的准确性和可靠性,同时保持信息的有效性。entmax 如下:

From Eq. 2, it's evident that the softmax function corresponds to 1-entmax, while sparsemax is equivalent to 2-entmax. When α lies between 1 and 2, the function generates sparse probability distributions with smoother transitions. In this study, to better filter out irrelevant information within a session, we substitute the transformation function in the attention mechanism with α-entmax.

从公式 2 中可以看出,softmax 函数对应于 1-entmax,而 sparsemax 对应于 2-entmax。当α位于 1 和 2 之间时,该函数生成具有更平滑过渡的稀疏概率分布。在本研究中,为了更好地过滤会话中的无关信息,我们将注意力机制中的变换函数替换为α-entmax。

Moreover, prior studies [32] implemented sparse attention in session-based recommendations, presenting an adaptive sparse attention mechanism tailored to session data. This approach focused on concentrating crucial information, allowing the identification of potentially unrelated items within the session while preserving greater weights for those items that are more relevant.

此外,先前研究[32]在基于会话的推荐中实现了稀疏注意力机制,提出了一种针对会话数据的自适应稀疏注意力机制。这种方法侧重于集中关键信息,允许在会话中识别出可能无关的项目,同时为更相关的项目保留更大的权重。

4 Method 4 方法

4.1 Graph construction 4.1 图构建

Based on previous research [33], we model graph structures from two perspectives:

基于先前研究[33],我们从两个角度对图结构进行建模:

Constructing the session graph : To represent the transitions in session data, we construct a session graph that models the sequence of item clicks. This graph is directed, with each edge indicating the direction of transitions based on the click order between items. Each node corresponds to a unique item within the session. To account for varying transition relationships, we assign a weight to each edge, calculated as the frequency of the transition divided by the out-degree of the starting node. This weighting scheme ensures that transitions with greater significance are assigned higher weights. The adjacency matrix is formed by concatenating the in-edge and out-edge weight matrices.

构建会话图 :为了表示会话数据中的转换,我们构建一个会话图来模拟项目点击的序列。这个图是定向的,每条边都指示了基于项目点击顺序的转换方向。每个节点对应会话中的一个唯一项目。为了考虑不同的转换关系,我们给每条边分配一个权重,该权重是转换频率除以起始节点的出度。这种加权方案确保了具有更大重要性的转换被分配更高的权重。邻接矩阵是通过连接入边和出边权重矩阵形成的。

Constructing the relation graph : To effectively leverage the useful information shared across sessions, the model builds a session relationship graph. In this graph, each node corresponds to a session. When two sessions share common items, their respective nodes are linked. The strength of the connection between two sessions is determined by the percentage of shared items, with a higher proportion indicating a stronger relationship.

构建关系图 :为了有效地利用会话之间共享的有用信息,该模型构建了一个会话关系图。在这个图中,每个节点对应一个会话。当两个会话共享共同的项目时,它们各自的节点被连接起来。两个会话之间的连接强度由共享项目的百分比决定,比例越高表示关系越强。

Our proposed model is shown in Fig. 1.

我们的模型如图 1 所示。

4.2 Initial embedding layer

4.2 初始嵌入层

As user preferences evolve based on factors like price, brand, or other attributes, these shifts can influence how frequently items are clicked. To handle this, we propose an adaptable frequency-based position encoding, building on traditional position encoding methods to better capture the positional significance and relevance of items. This representation is integrated with the item representations via the initial embedding layer. The initial latent representation can be expressed as:

随着用户偏好根据价格、品牌或其他属性等因素演变,这些变化可能会影响物品被点击的频率。为了应对这种情况,我们提出了一种自适应的基于频率的位置编码,该编码基于传统位置编码方法,以更好地捕捉物品的位置重要性和相关性。这种表示通过初始嵌入层与物品表示相结合。初始潜在表示可以表示为:

where denotes the node embedding in the adjacency matrix of the session graph , and represents the position and frequency message of the node in the session graph. By integrating item embed dings with frequency data, we can enhance session-level feature representation, providing deeper insights into users' evolving interest patterns and dynamic preference shifts over time.

其中 表示会话图 的邻接矩阵中的节点嵌入,而 代表节点在会话图中的位置和频率信息。通过将物品嵌入与频率数据相结合,我们可以增强会话级别的特征表示,从而更深入地了解用户随时间变化的兴趣模式和动态偏好转变。

One drawback of graph neural networks (GNNs) is that, while adding more layers improves model's capacity to get higher-order information and leads to over-smoothing. Previous research has shown that self-attention mechanisms are effective in mitigating the issue of long-distance dependencies in sequences by computing the relationships between items. Based on this, we incorporate the graph attention to strengthen the GNN layer's ability to capture long-range dependencies. In particular, we utilize GGNN as the base encoder and apply GAT to aggregate global information.

图神经网络(GNNs)的一个缺点是,虽然增加更多层可以提高模型获取高阶信息的能力,但会导致过平滑。先前的研究表明,自注意力机制通过计算项目之间的关系,在缓解序列中的长距离依赖问题上非常有效。基于此,我们引入图注意力来增强 GNN 层捕捉长距离依赖的能力。特别是,我们利用 GGNN 作为基础编码器,并应用 GAT 来聚合全局信息。

Considering the global information and session representation at different time points, according to previous researches [34, 35], we use the sequence model GRU to learn the information of each node in , and we combining GNN and GRU properties as follows:

考虑到不同时间点的全局信息和会话表示,根据先前的研究[34, 35],我们使用序列模型 GRU 来学习 中每个节点的信息,并将 GNN 和 GRU 的特性结合如下:

Among them, denotes the number of layers of GNN, denotes the two adjacency matrix input matrix and output matrix of the aggregate graph, , . is the parameter controlling the weight, and are the reset gate and update gate respectively, , . is bias. denotes the items in the current session. is the sigmoid function, and represents vector element-wise multiplication.

其中, 表示 GNN 的层数, 表示聚合图的两个邻接矩阵输入矩阵和输出矩阵, , 。 是控制权重的参数, 和 分别是重置门和控制门, , 。 是偏置。 表示当前会话中的项目。 是 sigmoid 函数, 表示向量逐元素乘法。

We compiled the data from all nodes within the current session. Since the importance of the information in these embeddings can vary, we apply an attention mechanism to combine all node information in the session graph, forming the initial global session representation, .

我们从当前会话中的所有节点编译了数据。由于这些嵌入中信息的重要性可能不同,我们应用注意力机制来结合会话图中所有节点的信息,形成初始的全局会话表示 。

Among them, denotes the representation at the last moment of the session, represents the output of GGNN for each moment of the session, and denotes the weights, is the sigmoid function.

其中, 表示会话最后一刻的表示, 代表会话每个时刻 GGNN 的输出, 表示权重, 是 sigmoid 函数。

4.3 Denoising sparse attention in session graph

4.3 会话图中去噪稀疏注意力

Given the research background of the entmax function, we designed a novel multi-head sparse graph attention mechanism. This mechanism achieves in-depth understanding and efficient utilization of complex data structures by processing information from different feature subspaces in parallel.

鉴于 entmax 函数的研究背景,我们设计了一种新颖的多头稀疏图注意力机制。该机制通过并行处理来自不同特征子空间的信息,实现了对复杂数据结构的深入理解和高效利用。

Specifically, the adaptive sparse attention denoising mechanism we proposed contains four core steps: First, unlike the traditional self-attention mechanism, we introduced a linear projection transformation in the processing of the query (Q). This design enables our self-attention mechanism to handle the relations between Q, K and V more flexibly, which not only enhances the ability to distinguish different sources, but also promotes reasonable trade-offs and optimization in the process of information integration. Through this series of innovative designs, our multi-head sparse graph attention mechanism can more accurately capture key information and suppress noise interference in complex and changing data environments, thereby providing users with more accurate and reliable prediction results. And then calculate the parameter needed in the sparse attention mechanism, which controls the sparsity. The attention score is calculated through the multi-head sparse attention mechanism as follows:

具体来说,我们提出的自适应稀疏注意力降噪机制包含四个核心步骤:首先,与传统自注意力机制不同,我们在查询(Q)的处理中引入了线性投影变换。这种设计使我们的自注意力机制能够更灵活地处理 Q、K 和 V 之间的关系,这不仅增强了区分不同来源的能力,还促进了信息集成过程中的合理权衡和优化。通过这一系列创新设计,我们的多头稀疏图注意力机制能够更准确地捕捉关键信息并抑制复杂和变化数据环境中的噪声干扰,从而为用户提供更准确可靠的预测结果。然后计算稀疏注意力机制中需要的参数 ,该参数控制稀疏度。通过多头稀疏注意力机制计算注意力分数如下:

where , denotes sigmoid function, is weight matrix.

其中 , 表示 sigmoid 函数, 是权重矩阵。

Afterwards, in order to prevent gradient vanishing and overfitting, we added a feedforward neural network and residual structure, and applied dropout to the hidden layer representation to obtain more robust target node representation and hidden layer representation as follows:

之后,为了防止梯度消失和过拟合,我们添加了一个前馈神经网络和残差结构,并将 dropout 应用于隐藏层表示,以获得更鲁棒的目标节点表示和隐藏层表示,如下所示:

where , are weight matrix, , are biases.

其中, 、 是权重矩阵, 、 是偏置。

Second step, after obtaining the representation of the target node, we perform a denoising operation on the previously learned global session representation as follows:

第二步,在获得目标节点的表示后,我们对之前学习的全局会话表示 进行去噪操作,如下所示:

where denotes the -layer denoising, , is mean value of , is weight matrix. In this paper, . To prevent overfitting, dropout layers are commonly applied. If overfitting happens with other datasets, you can introduce batch normalization (BN) layers, fine-tune the learning rate, and implement early stopping as additional measures to address the issue.

其中, 表示 层去噪, 、 是 的平均值, 是权重矩阵。在本文中, 。为了防止过拟合,通常应用 dropout 层。如果其他数据集出现过拟合,可以引入批量归一化(BN)层,微调学习率,并实施提前停止作为解决该问题的额外措施。

Third, we also utilize the self-attention mechanism to denoise, this makes the obtained global features sparsely. We add the target node representation to the sparse attention network, which get a representation that is more related to the target node.

第三,我们还利用自注意力机制进行去噪,这使得获得的全局特征更加稀疏。我们将目标节点表示添加到稀疏注意力网络中,从而获得与目标节点更相关的表示。

where , represent the weight matrix, are biases, denotes the attention score, and denotes the initial global embedding, is the sigmoid function.

其中 、 代表权重矩阵, 是偏置, 表示注意力分数, 表示初始全局嵌入, 是 sigmoid 函数。

We create a detailed session representation by integrating insights from both the global and target viewpoints.

我们通过整合全局和目标视角的见解,创建一个详细的会话表示。

where represents the weight matrix and represents the bias.

其中 代表权重矩阵, 代表偏置。

4.4 Denoising sparse attention in relation graph

4.4 关系图中稀疏注意力去噪

To maximize the utilization of features within the relation graph, we introduce a second sparse graph attention denoising module. This module not only extracts meaningful insights about the target node from the relation graph but also detects collaborative session data from other sessions that share similar interests with the current one in the subsequent module.

为了最大化利用关系图中的特征,我们引入第二个稀疏图注意力降噪模块。该模块不仅从关系图中提取关于目标节点的有意义的见解,还在后续模块中检测与其他会话共享相似兴趣的协作会话数据。

Initially, we employ the average embeddings of items within a session to establish the preliminary relation representation. We then enhance this initial session relation representation using a graph convolution network. Our approach involves approximating a relation matrix through the Laplacian mapping method, outlined as follows:

最初,我们使用会话中物品的平均嵌入来建立初步的关系表示。然后,我们使用图卷积网络增强这种初始的会话关系表示。我们的方法涉及通过拉普拉斯映射方法近似关系矩阵,具体如下:

where represents degree matrix, signifies association matrix, and denotes label matrix. means the number of sessions in and is the number of convolutional layers.

其中 代表度矩阵, 表示关联矩阵, 表示标签矩阵。 表示 中的会话数量, 是卷积层的数量。

We then employ a sparse attention mechanism to clean the session representation based on the relationship matrix. Thus, we propose a sparse relational graph attention denoising approach as outlined below:

我们随后采用稀疏注意力机制,根据关系矩阵对会话表示进行清理。因此,我们提出了如下所示的稀疏关系图注意力降噪方法:

where denotes the weight matrix, represents the bias, is the sigmoid function.

其中 表示权重矩阵, 代表偏置, 是 sigmoid 函数。

4.5 Prediction 4.5 预测

This paper introduces an Auxiliary Enhancement Module (AEM) designed to refine and improve the representation of the current session. By utilizing current relation information and session coverage information, AEM identifies the top N sessions that are linked to the current session , which are utilized as comparable candidate sessions . We proceed to calculate the similarity score by comparing the representation of the current session with that of each candidate session. The detailed formula is provided below:

本文介绍了一种辅助增强模块(AEM),旨在优化和改进当前会话的表示。通过利用当前关系信息和会话覆盖信息,AEM 识别与当前会话 相关联的前 N 个会话,这些会话被用作可比候选会话 。然后,我们通过比较当前会话的表示与每个候选会话的表示来计算相似度得分。详细公式如下:

The ultimate embedding of the session combines both the auxiliary embedding and the session embedding. Next, we determine the likelihood of each item being clicked. To assess the model's performance, we utilize cross-entropy loss, which can be expressed with the following formula:

会话的最终嵌入结合了辅助嵌入和会话嵌入。接下来,我们确定每个物品被点击的可能性。为了评估模型性能,我们使用交叉熵损失,其公式如下:

where is item embedding, where is the one-hot encoded representation of the real click, is predictive value.

其中 是物品嵌入,其中 是真实点击的一热编码表示, 是预测值。

5 Experiment 5 实验

5.1 Datasets and Metrics 5.1 数据集和指标

We conducted experiments using three publicly available datasets sourced from real-world platforms: Diginetica, Tmall, and Nowplaying, as detailed in Table 1. The Diginetica dataset, obtained from CIKMCup2016. The Tmall dataset, introduced during the IJCAI-15 competition. The Nowplaying dataset originates from the Music Listening Behavior Database. Similar to previous studies [36], we processed the datasets by removing sessions that were between 1 to 5 interactions long, as they do not yield meaningful insights. We designated the last week of the dataset for testing purposes, while the remainder served as the training set. To assess the effectiveness of our Top-N recommendations, we utilized two commonly employed metrics: Precision@N (P@N) and Mean Reciprocal Rank@N (MRR@N), with N set to 20.

我们使用三个公开数据集进行了实验,这些数据集来自现实平台:Diginetica、Tmall 和 Nowplaying,具体信息如表 1 所示。Diginetica 数据集来自 CIKMCup2016。Tmall 数据集在 IJCAI-15 竞赛中介绍。Nowplaying 数据集来源于音乐收听行为数据库。与先前研究[36]类似,我们通过删除 1 到 5 次交互之间的会话来处理数据集,因为这些会话不会产生有意义的见解。我们将数据集的最后一周用于测试目的,其余部分作为训练集。为了评估我们的 Top-N 推荐的有效性,我们使用了两个常用的指标:Precision@N(P@N)和 Mean Reciprocal Rank@N(MRR@N),其中 N 设置为 20。

表 1 数据集统计

5.2 Baselines 5.2 基准

-

POP: One common approach in recommender systems is to select the top N most frequently occurring items from the training dataset, which serves as a baseline method.

POP:推荐系统中的一种常见方法是选择训练数据集中出现频率最高的前 N 个物品,这作为了一种基线方法。 -

Item-KNN [37]: It employs cosine similarity as a measure to suggest items that closely resemble the one previously clicked during the session.

Item-KNN [ 37]:它采用余弦相似度作为度量标准,以推荐与会话中之前点击的物品相似的物品。 -

FPMC: By integrating matrix factorization with a Markov chain, the model effectively captures both sequential dynamics and individual user preferences.

FPMC:通过将矩阵分解与马尔可夫链集成,该模型有效地捕捉了序列动态和个体用户偏好。 -

GRU4Rec: It uses GRU to represent user sequences.

GRU4Rec:它使用 GRU 来表示用户序列。 -

NARM: It improves GRU4Rec by incorporating the attention mechanism.

NARM:它通过引入注意力机制来改进 GRU4Rec。 -

SR-GNN: It employs a GGNN layer to generate item embeddings, followed by the application of self-attention to the final item.

SR-GNN:它使用 GGNN 层生成项目嵌入,然后对最终项目应用自注意力。 -

FGNN: It primarily creates session-level representations for recommendations by understanding the underlying sequence of items.

FGNN:它主要通过理解项目的基本序列来创建会话级别的推荐表示。 -

CSRM [38]: It uses a memory network to check the most recent m sessions to improve the accuracy of user intent prediction.

CSRM [ 38]:它使用记忆网络来检查最近的 m 个会话,以提高用户意图预测的准确性。 -

GCE-GNN: It enhances the global context, aiming to simultaneously obtain global and session-level item representations to enhance recommendation performance.

GCE-GNN:它增强了全局上下文,旨在同时获得全局和会话级别的物品表示,以增强推荐性能。 -

MTD [39]: This model is based on multi-level item transition dynamics, inherently capturing the hierarchical patterns of item transitions within and between sessions.

MTD [ 39]:该模型基于多级物品转换动态,本质上捕捉了物品在会话内部以及会话之间的层次转换模式。 -

S2-DHCN [40]: This approach creates two hypergraphs to encapsulate both inter-session and additional information, employing self-supervised learning to boost its performance.

S2-DHCN [ 40]:这种方法创建了两个超图来封装跨会话和附加信息,采用自监督学习来提升其性能。 -

CORE [41]: It creates an encoder that maintains consistent representations by utilizing a linear combination of input item embeddings to form session embeddings.

CORE [ 41]:它创建了一个编码器,通过利用输入物品嵌入的线性组合来形成会话嵌入,从而保持一致的表示。 -

HIDE: It examines dynamic shifts from various viewpoints, builds several hypergraphs, and interprets intentions on both micro and macro levels.

隐藏:它从多个角度考察动态变化,构建多个超图,并在微观和宏观层面解释意图。 -

Atten-Mixer [42] It proposes to remove the GNN propagation part, which leverages both concept-view and instanceview readouts to achieve multi-level reasoning over item transitions.

Atten-Mixer [42]:它提出移除 GNN 传播部分,利用概念视图和实例视图的读出,以实现对项目转换的多级推理。 -

TASI-GNN [43]: It combines GNN with sparse graph attention to reduce noise in session data.

TASI-GNN [43]:它将 GNN 与稀疏图注意力结合,以减少会话数据中的噪声。

5.3 Experimental settings

5.3 实验设置

To optimize the internal session information, we set the batch size to 512, hidden size to 100, epochs to 30, the number of heads in the multi-head attention mechanism to 5, and all experiments were conducted on RTX 3090 GPU. Other parameters are based on prior research, with a dimensionality of 100 and a single layer in the GGNN. The model employs the Adam optimizer, with an initial learning rate of 0.001 that decreases by a factor of 0.1 every three training epochs. Additionally, an L2 regularization penalty of 10–5 is applied.

为了优化内部会话信息,我们将批量大小设置为 512,隐藏层大小设置为 100,训练轮次设置为 30,多头注意力机制中的头数设置为 5,所有实验均在 RTX 3090 GPU 上运行。其他参数基于先前研究,GGNN 的维度为 100,单层结构。模型采用 Adam 优化器,初始学习率为 0.001,每三个训练轮次减少 10 倍。此外,还应用了 10 倍的 L2 正则化惩罚。

5.4 Experimental results 5.4 实验结果

Table 2 presents the empirical findings from 15 baseline models alongside our novel method, evaluated on three real-world datasets. The top-performing results in each category are indicated in bold, while the second-best results are underlined. Notably, DDSG consistently surpasses all other models across both metrics on the three datasets, confirming the effectiveness of our proposed approach. Figure 2 visually illustrates the advantages of top data in two metrics, this model versus the baseline model.

表 2 展示了 15 个基准模型与我们的新方法在三个真实世界数据集上的实证发现。每个类别中的最佳结果用粗体表示,而次佳结果用下划线表示。值得注意的是,DDSG 在这三个数据集的两种指标上均持续超越所有其他模型,证实了我们所提出方法的有效性。图 2 直观地展示了在两个指标中,该模型与基准模型相比的优势。

表 2 总体比较

Among the conventional methods, POP ranks the lowest due to its reliance solely on recommending the top N frequent items. In contrast, FPMC demonstrates better performance on all three datasets by employing Markov chains and matrix factorization techniques. Item-KNN performs best on the Diginetica and Nowplaying datasets, although it focuses only on item similarity without accounting for the sequence of items within sessions, limiting its ability to capture item transitions. Generally, neural network-based approaches yield superior results in session-based recommendations compared to traditional methods. While GRU4Rec does not outshine Item-KNN on Diginetica, it remains the pioneering RNN-based session-based recommendation method, showcasing the potential of RNNs in sequence modeling. NARM incorporates an attention mechanism to better reflect user intent, achieving significant performance improvements and demonstrating the effectiveness of this approach.

在传统方法中,由于仅依赖于推荐前 N 个频繁项目,POP 排名最低。相比之下,FPMC 通过使用马尔可夫链和矩阵分解技术,在所有三个数据集上均表现出更好的性能。Item-KNN 在 Diginetica 和 Nowplaying 数据集上表现最佳,尽管它只关注项目相似性,而没有考虑到会话中项目的顺序,限制了其捕捉项目转换的能力。一般来说,基于神经网络的方案在基于会话的推荐中比传统方法产生更优的结果。虽然 GRU4Rec 在 Diginetica 上没有超越 Item-KNN,但它仍然是开创性的基于 RNN 的会话推荐方法,展示了 RNN 在序列建模中的潜力。NARM 通过引入注意力机制来更好地反映用户意图,实现了显著的性能提升,并证明了这种方法的有效性。

RNNs are primarily tailored for sequence modeling, but session-based recommendation presents complexities beyond simple sequencing, as user preferences can shift during a session. One approach combined with attention mechanisms to capture the dynamic nature of user interests within their click sequences, significantly advancing session-based recommendation methods. Conversely, RNNs struggle to leverage insights beyond the transition patterns, but graph neural networks can effectively address these limitations.

RNNs 主要针对序列建模,但基于会话的推荐在简单的序列之外还呈现复杂性,因为用户偏好可能会在会话中发生变化。一种结合注意力机制的方法被用来捕捉用户在其点击序列中兴趣的动态性,显著推进了基于会话的推荐方法。相反,RNNs 难以利用过渡模式之外的洞察,但图神经网络可以有效地解决这些限制。

SR-GNN enhanced performance by structuring session information into graph format and leveraging GNN to understand its relationships. FGNN utilized WGAT to effectively manage this data, while CSRM employed an attention mechanism to grasp session information. Both models highlight the importance of session-level insights. Unlike CORE, which refrains from applying nonlinear layers to item embeddings for session encoding, it instead learns specific weights for every item and uses a weighted sum to create consistent session and item embeddings. These models illustrate the potential of developing supplementary graphs, indicating that integrating additional knowledge beyond the current session may enhance the understanding of users' latent interests.

SR-GNN 通过将会话信息结构化为图格式并利用 GNN 来理解其关系来提升性能。FGNN 利用 WGAT 有效地管理这些数据,而 CSRM 采用注意力机制来把握会话信息。这两个模型都强调了会话级洞察的重要性。与 CORE 不同,CORE 在会话编码时避免对项目嵌入应用非线性层,而是为每个项目学习特定的权重,并使用加权求和来创建一致的会话和项目嵌入。这些模型展示了开发辅助图的可能性,表明将超出当前会话的额外知识整合进来可能增强对用户潜在兴趣的理解。

Moreover, hypergraphs enhance the representation of high-order relationships through hyperedges, enabling connections among multiple nodes. Both DHCN and HIDE investigate intricate transition relationships utilizing hypergraphs. DHCN employs line graphs alongside self-supervised learning to mitigate data sparsity issues, whereas HIDE focuses on encoding high-order interest transitions and categorizing intent based on each item click. HIDE shows substantial improvement over DHCN, highlighting the value of intent information in recommendation systems.

此外,超图通过超边增强了高阶关系的表示,使得多个节点之间能够建立联系。DHCN 和 HIDE 都利用超图来研究复杂的转换关系。DHCN 采用线图和自监督学习相结合的方法来缓解数据稀疏性问题,而 HIDE 则专注于编码高阶兴趣转换,并根据每个项目的点击行为对意图进行分类。HIDE 在性能上对 DHCN 有显著提升,突显了意图信息在推荐系统中的价值。

During the use of graph modeling sessions, researchers can derive both inter-session and intra-session graphs. MTD indicates that focusing solely on intra-session item transitions does not adequately capture the intricate item transitions from both local and global viewpoints. GCE-GNN significantly enhances performance and offers a computationally efficient option for large datasets, as it models session information from both inter-session and intra-session angles.

在图建模会话的使用过程中,研究人员可以推导出会话间和会话内图。MTD 表明,仅仅关注会话内项目转换并不能充分捕捉从局部和全局观点来看的复杂项目转换。GCE-GNN 显著提升了性能,并为大型数据集提供了一个计算效率高的选项,因为它从会话间和会话内两个角度对会话信息进行建模。

Among the baseline methods, GNN-based approaches show superior results on the Diginetica and Nowplaying datasets. By treating each session sequence as a subgraph and employing GNNs to encode items, both SR-GNN and GCE-GNN illustrate the advantages of GNNs in session-based recommendations. This highlights the superiority of graph modeling over sequence modeling, suggesting that using dual graphs to represent session information can extract additional insights and improve session representation. Atten-Mixer achieved impressive performance. This model highlights item co-occurrence associations by dividing user behavior sequences into several subsequences. Such operations enable it to capture multi-level co-occurrence patterns, thus helping to improve its performance. TASI-GNN uses an adaptive denoising objective to learn the objective representation of a conversation from items and performs better than previous models. However, both models lack the ability to capture high-order feature representations.

在基线方法中,基于 GNN 的方法在 Diginetica 和 Nowplaying 数据集上表现出优异的结果。通过将每个会话序列视为子图,并使用 GNN 对项目进行编码,SR-GNN 和 GCE-GNN 展示了 GNN 在基于会话推荐中的优势。这突出了图建模在序列建模之上的优越性,表明使用双图来表示会话信息可以提取额外的见解并提高会话表示。Atten-Mixer 实现了令人印象深刻的性能。该模型通过将用户行为序列划分为几个子序列来突出项目共现关联。这些操作使其能够捕捉多级共现模式,从而有助于提高其性能。TASI-GNN 使用自适应去噪目标从项目学习对话的目标表示,并比先前模型表现更好。然而,这两个模型都缺乏捕捉高阶特征表示的能力。

The model we proposed, DDSG, is generally better than other SOTA models, except for the poor performance in the Tmall dataset. The reason is that the model we proposed not only uses two perspectives for modeling, but also uses a sparse attention mechanism for effective denoising, so that the model can not only denoise from a global perspective, but also perform micro-denoising from a local perspective within the session. In particular, on the Nowplaying dataset, our model outperforms the current best model by 107% in MRR@20 and 92% in P@20, which demonstrates the superior ability of our model in modeling sessions. The DDSG model models the sessions from two graph perspectives and performs denoising based on a sparse attention mechanism, and strengthens the session embedding, ultimately improving the accuracy of recommendations.

我们提出的模型,DDSG,总体上优于其他 SOTA 模型,除了在 Tmall 数据集上的表现较差。原因是,我们提出的模型不仅使用两种视角进行建模,还使用稀疏注意力机制进行有效去噪,从而使模型不仅能从全局视角进行去噪,还能在会话内部从局部视角进行微去噪。特别是,在 Nowplaying 数据集上,我们的模型在 MRR@20 上比当前最佳模型高出 107%,在 P@20 上高出 92%,这证明了我们模型在会话建模方面的优越能力。DDSG 模型从两个图视角对会话进行建模,并基于稀疏注意力机制进行去噪,加强了会话嵌入,最终提高了推荐精度。

6 Ablation experiment 6 消融实验

6.1 Efficiency on dual sparse graph attention mechanism for denoising

6.1 噪声消除双稀疏图注意力机制效率

To tackle the challenge of noisy data and improve the model's ability to capture key information, we propose DDSA. As illustrated in Fig. 3, the DDSG without DDSA shows subpar performance on the Nowplaying and Tmall datasets, underscoring the critical role of DDSA in mitigating the effects of noisy clicks within the two-layer graph channel. This dual-layer architecture significantly boosts the model's expressiveness. Furthermore, the target representation derived from the two-layer sparse attention mechanism is crucial for the inter-session channel. The original model employs this target representation to effectively filter out noise from the session relationship representation, thereby accurately recognizing adjacent sessions with similar intent. Our results highlight that DDSA is a vital component of the model, exerting a considerable influence on both channels. Notably, this variant surpasses the original model in MRR@20 for the Diginetica and Nowplaying datasets. However, we observe that when noisy items constitute a large portion of the session and user intent appears disorganized and ambiguous, the two-layer sparse attention mechanism may struggle to differentiate effectively between noisy and relevant items, potentially leading to counterproductive outcomes.

为了应对噪声数据挑战并提高模型捕捉关键信息的能力,我们提出了 DDSA。如图 3 所示,未使用 DDSA 的 DDSG 在 Nowplaying 和 Tmall 数据集上的表现不佳,突显了 DDSA 在减轻两层图通道中噪声点击影响方面的关键作用。这种双层架构显著提升了模型的表达能力。此外,由两层稀疏注意力机制推导出的目标表示对于会话间通道至关重要。原始模型使用这种目标表示来有效地从会话关系表示中过滤出噪声,从而准确识别意图相似的相邻会话。我们的结果表明,DDSA 是模型的一个关键组成部分,对两个通道都有相当大的影响。值得注意的是,这种变体在 Diginetica 和 Nowplaying 数据集的 MRR@20 上超过了原始模型。 然而,我们观察到当噪声项占会话的大部分时,用户意图显得混乱且模糊,两层稀疏注意力机制可能难以有效区分噪声项和相关信息项,可能导致事与愿违的结果。

6.2 Efficiency on relation graph

6.2 关系图上的效率

We suggest leveraging session relation graphs to help the present session identify analogous intent, so that improving the DDSG’s capacity to incorporate inter-session data. As illustrated in Fig. 3, our experiments indicate that DDSG without the relation graph module (RGM) shows a notable decline in performance compared to the original model across all three datasets. To harness the insights from the session graph, our model integrates both extra-session embeddings that describe session message and inter-session embeddings that indicate the inherent relationships among sessions, forming a comprehensive perspective. The absence of inter-session relational structure leads to a limited amount of final session information, which adversely affects model performance. Our findings highlight that inter-session information is essential for accurately constructing session embedding and assessing session intent similarity. This underscores the efficacy of incorporating session relation graphs in session recommendations and stresses the importance of enriching session representations by integrating inter-session information.

我们建议利用会话关系图来帮助当前会话识别类似意图,从而提高 DDSG 整合跨会话数据的能力。如图 3 所示,我们的实验表明,与原始模型相比,没有关系图模块(RGM)的 DDSG 在所有三个数据集上均表现出明显的性能下降。为了利用会话图的信息,我们的模型集成了额外的会话嵌入,这些嵌入描述了会话消息,以及表示会话之间固有关系的跨会话嵌入,从而形成一个全面的视角。缺乏跨会话关系结构会导致最终会话信息的数量有限,这不利于模型性能。我们的研究结果表明,跨会话信息对于准确构建会话嵌入和评估会话意图相似性至关重要。这强调了在会话推荐中引入会话关系图的效用,并强调了通过整合跨会话信息来丰富会话表示的重要性。

6.3 Efficiency on auxiliary enhancement module

6.3 辅助增强模块的效率

We introduced a modified version of the DDSG model, referred to as DDSG without AEM, to investigate how the absence of auxiliary representation affects overall model performance. As illustrated in Fig. 3, this variant exhibit lower performance metrics compared to the original model across three datasets. The auxiliary enhancement module identifies the top k most similar representations from the combined relation graph and session graph embeddings, emphasizing the current session's interests. This integration improves session representation accuracy by incorporating contextual features and offering additional insights for the final session representation.

我们引入了 DDSG 模型的修改版,称为无 AEM 的 DDSG,以研究辅助表示的缺失如何影响整体模型性能。如图 3 所示,这种变体在三个数据集上与原始模型相比,表现出较低的性能指标。辅助增强模块从组合关系图和会话图嵌入中识别出最相似的 k 个表示,强调当前会话的兴趣。这种集成通过结合上下文特征并为最终会话表示提供额外见解,提高了会话表示的准确性。

6.4 Efficiency on parameter

6.4 参数效率

In our work, we implement a multi-head attention mechanism to gather relevant attention information from sequences. We explored how varying the number of heads in this mechanism influences model performance. As illustrated in Fig. 4, which presents the effect of head count on the P@20 metric across three datasets, an increase in the number of heads generally allows the model to capture more session-related information, thereby enhancing performance. However, when the number of heads reaches 8, a decline in model effectiveness is observed. The optimal performance occurs when the number of heads is set to 4. Overall, adjusting the number of heads can significantly boost its performance.

在我们的工作中,我们实现了一种多头注意力机制来从序列中收集相关的注意力信息。我们探讨了该机制中头数的变化如何影响模型性能。如图 4 所示,该图展示了头数对三个数据集上 P@20 指标的影响,头数的增加通常使模型能够捕获更多与会话相关的信息,从而提高性能。然而,当头数达到 8 时,观察到模型的有效性下降。当头数设置为 4 时,性能达到最佳。总的来说,调整头数可以显著提升其性能。

Then, in order to explore the relationship between the number of heads and other parameters, we conducted experiments with different batch sizes, and the experimental results were shown in Fig. 4. We used the Diginetica dataset to carry out experiments with three different head numbers. Experiments show that the accuracy rate increases with the increase of batch size, but decreases when increasing to 1024. It works best when the number of heads is 4 and the batch size is 512. There is a certain relationship between different parameters. Although the increase of the number of heads can make the model process more information, it may increase the computational complexity. The increase of batch size may improve the computational efficiency but may also cause the model to converge to a poor local optimal solution. Make full use of the relationship between each parameter, and gradually adjust each parameter will make the model achieve the best effect.

然后,为了探索头数与其他参数之间的关系,我们进行了不同批量大小的实验,实验结果如图 4 所示。我们使用 Diginetica 数据集进行了三种不同头数的实验。实验表明,随着批量大小的增加,准确率提高,但增加到 1024 时则下降。当头数为 4 且批大小为 512 时效果最佳。不同参数之间存在一定的关系。虽然增加头数可以使模型处理更多信息,但可能会增加计算复杂度。增加批大小可能会提高计算效率,但也可能导致模型收敛到较差的局部最优解。充分利用每个参数之间的关系,并逐步调整每个参数,可以使模型达到最佳效果。

7 Conclusion 7 结论

In this study, we introduce a novel denoising dual sparse graph attention model for session-based recommendations, referred to as DDSG. This model utilizes a denoising dual sparse graph attention network to integrate insights from both external and internal session viewpoints, thereby improving the representation of session targets and mitigating noise interference. Comprehensive experiments across three datasets reveal that the DDSG model significantly surpasses existing leading models. Looking ahead, we plan to refine our sparse graph denoising methods to enhance the utilization of inherent relationships within the relational graph.

在这项研究中,我们介绍了一种新颖的用于基于会话推荐的降噪双稀疏图注意力模型,称为 DDSG。该模型利用降噪双稀疏图注意力网络来整合外部和内部会话观点的见解,从而提高会话目标的表示并减轻噪声干扰。在三个数据集上的全面实验表明,DDSG 模型显著优于现有领先模型。展望未来,我们计划改进我们的稀疏图降噪方法,以增强关系图内固有关系的利用。

Data availability 数据可用性

The datasets generated during the current study are available from the corresponding author on reasonable request.

当前研究期间生成的数据集,在合理请求下,可从对应作者处获得。

Abbreviations 缩写

- SBR: SBR:

-

Session-based recommendation

基于会话的推荐 - RNN: RNN:

-

Recurrent neural networks

循环神经网络 - LSTM: LSTM

-

Long short-term memory networks

长短期记忆网络 - GAT: GAT:图注意力网络

-

Graph attention networks

图注意力网络 - GNN: GNN:图神经网络

-

Graph neural networks 图神经网络

- MC:

-

Markov chains 马尔可夫链

- GRU: GRU

-

Gated recurrent unit 门控循环单元

- GGNN: GGNN:

-

Gated graph neural networks

门控图神经网络 - DDSG: DDSG:

-

Our proposed model, denoising dual sparse graph attention model

我们提出的模型,去噪双稀疏图注意力模型 - DDSA: DDSA:

-

Denoising dual sparse attention

去噪双稀疏注意力 - RGM: RGM:

-

Relation graph module 关系图模块

- AEM: AEM:

-

Auxiliary enhancement module

辅助增强模块

References 参考文献

Wang SJ, Cao LB, Wang Y, et al. A survey on session-based recommender systems. ACM Comput Surv. 2021;54(7):1–38.

王思佳,曹立波,王宇,等. 基于会话的推荐系统综述. ACM 计算机调查. 2021;54(7):1–38.Sherstinsky A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D. 2020;404: 132306.

Sherstinsky A. 循环神经网络(RNN)和长短期记忆(LSTM)网络基础。Physica D. 2020;404: 132306.Yu Y, Chen J, Gao T, et al. DAG-GNN: DAG structure learning with graph neural networks. In: Proceedings of the 36th International conference on machine learning, 2019: 7154–7163.

Yu Y, Chen J, Gao T, et al. DAG-GNN:使用图神经网络进行有向无环图结构学习。In:第 36 届国际机器学习会议论文集,2019:7154–7163.Veličković P, Cucurull G, Casanova A, et al. Graph attention networks. In: Proceedings of the 6th International Conference on Learning Representations, 2018; 1–12.

Veličković P, Cucurull G, Casanova A, 等人. 图注意力网络。载于:第 6 届国际学习表示会议论文集,2018;1–12。Ching WK, Ng MK. Markov chains: models, algorithms and applications, 2006.

Ching WK, Ng MK. 马尔可夫链:模型、算法与应用,2006.Rendle S, Freudenthaler C, Schmidt-Thieme L. Factorizing personalized markov chains for next-basket recommendation. In: Proceedings of the 19th International Conference on World Wide Web, 2010: 811–820.

Rendle S, Freudenthaler C, Schmidt-Thieme L. 基于个性化马尔可夫链的下一篮子推荐分解。载于:第 19 届国际万维网会议论文集,2010:811–820。Hidasi B, Karatzoglou A, Baltrunas L, et al. Session-based recommendations with recurrent neural networks. In: Proceedings of the 4th International Conference on Learning Representations, 2016: 1–10.

Hidasi B, Karatzoglou A, Baltrunas L, 等. 基于会话的推荐与循环神经网络。载于:第 4 届国际学习表示会议论文集,2016:1–10.Tan YK, Xu XX, Liu Y. Improved recurrent neural networks for session-based recommendations. In: Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, 2016: 17–22.

Tan YK, Xu XX, Liu Y. 基于会话的推荐中改进的循环神经网络。载于:2016 年第 1 届深度学习推荐系统研讨会论文集,17–22。Li J, Ren PJ, Chen ZM, et al. Neural attentive session-based recommendation. In: Proceedings of the 26th ACM International Conference on Information and Knowledge Management, 2017: 1419–1428.

李杰,任鹏举,陈志明,等. 基于神经注意力的会话推荐. 载于第 26 届 ACM 国际信息与知识管理会议论文集,2017: 1419–1428.Song J, Shen H, Ou ZJ, et al. ISLF: interest shift and latent factors combination model for session-based recommendation. In: Proceedings of 28th International Joint Conference on Artificial Intelligence, 2019: 5765–5771.

宋杰,沈浩,欧志坚,等. ISLF:基于兴趣转移和潜在因子组合的会话推荐模型. 载于第 28 届国际人工智能联合会议论文集,2019: 5765–5771.Wang SJ, Hu L, Wang Y, et al. Modeling multi-purpose sessions for next-item recommendations via mixture-channel purpose routing networks. In: Proceedings of 28th International Joint Conference on Artificial Intelligence, 2019: 3771–3777.

王思佳,胡磊,王宇,等. 通过混合通道目的路由网络建模多用途会话以进行下一项推荐. 载于第 28 届国际人工智能联合会议论文集,2019: 3771–3777.Wang SJ, Hu L, Wang Y, et al. Sequential recommender systems: challenges, progress and prospects. In: Proceedings of 28th International Joint Conference on Artificial Intelligence, 2019: 6332–6338.

王思佳,胡丽,王勇,等. 序列推荐系统:挑战、进展与展望. 载于:第 28 届国际人工智能联合会议论文集,2019:6332–6338.Liu Q, Zeng YF, Mokhosi R, et al. STAMP: short-term attention/memory priority model for session-based recommendation. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2018: 1831–1839.

刘强,曾宇飞,莫科西,等. STAMP:基于会话的推荐短期注意力/记忆优先级模型. 载于:第 24 届 ACM SIGKDD 国际知识发现与数据挖掘会议论文集,2018:1831–1839.Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. In: Proceedings of the 31st International Conference on Neural Information Processing Systems, 2017: pp.6000–6010.

瓦桑尼,沙泽尔,帕尔马尔,等. 注意力即是所需一切. 载于:第 31 届国际神经信息处理系统会议论文集,2017:pp.6000–6010.Kang WC, Mcauley J. Self-attentive sequential recommendation. In: Proceedings of the 18th IEEE International Conference on Data Mining, 2018: 197–206.

康伟成,麦克奥利,自我注意力序列推荐. 载于:第 18 届 IEEE 国际数据挖掘会议论文集,2018:197–206.Luo AJ, Zhao PP, Liu YC, et al. Collaborative self-attention network for session-based recommendation. In: Proceedings of 29th International Joint Conference on Artificial Intelligence, 2020: 2591–2597.

Luo AJ, Zhao PP, Liu YC, 等. 基于会话的推荐协同自注意力网络。载于:第 29 届国际人工智能联合会议论文集,2020:2591–2597。Ying HC, Zhuang FZ, Zhang FZ, et al. Sequential recommender system based on hierarchical attention network. In: Proceedings of the 27th International Joint Conference on Artificial Intelligence, 2018: 3926–3932.

Ying HC, Zhuang FZ, Zhang FZ, 等. 基于层次注意力网络的序列推荐系统。载于:第 27 届国际人工智能联合会议论文集,2018:3926–3932。He ZK, Zhao HD, Lin Z, et al. Locker: locally constrained self-attentive sequential recommendation. In: Proceedings of the 30th ACM International Conference on Information & Knowledge Management, 2021: 3088–3092.

He ZK, Zhao HD, Lin Z, 等. Locker:局部约束自注意力序列推荐。载于:第 30 届 ACM 国际信息与知识管理会议论文集,2021:3088–3092。Wu S, Tang YY, Zhu YQ, et al. Session-based recommendation with graph neural networks. In: Proceedings of the AAAI Conference on Artificial Intelligence, 2019: 33(01): 346–353.

Wu S, Tang YY, Zhu YQ, 等. 基于图神经网络的会话推荐。载于:2019 年 AAAI 人工智能会议论文集,第 33 卷第 1 期:346–353。Xu CF, Zhao PP, Liu YC, et al. Graph contextualized self-attention network for session-based recommendation. In: Proceedings of 28th International Joint Conference on Artificial Intelligence, 2019: 3940–3946.

Yu F, Zhu YQ, Liu Q, et al. TAGNN: target attentive graph neural networks for session-based recommendation. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, 2020: 1921–1924.

Qiu RH, Li JJ, Huang Z, et al. Rethinking the item order in session-based recommendation with graph neural networks. In: Proceedings of the 28th ACM International Conference on Information and Knowledge Management, 2019: 579–588.

Wang ZY, Wei W, Cong G, et al. Global context enhanced graph neural networks for session-based recommendation. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, 2020: 169–178.

Wang JL, Ding KZ, Zhu ZW, et al. Session-based recommendation with hypergraph attention networks. In: Proceedings of the 2021 SIAM International Conference on Data Mining, 2021: 82–90.

Li YF, Gao C, Luo HL, et al. Enhancing hypergraph neural networks with intent disentanglement for session-based recommendation. In: Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2022: 1997–2002.

Han QL, Zhang C, Chen R, et al. Multi-faceted global item relation learning for session-based recommendation. In: Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2022: 1705–1715.

Li ZH, Wang XZ, Yang C, et al. Exploiting explicit and implicit item relationships for session-based recommendation. In: Proceedings of the 16th ACM International Conference on Web Search and Data Mining, 2023: 553–561.

Zhang CK, Zheng WJ, Liu Q, et al. SEDGN: sequence enhanced denoising graph neural network for session-based recommendation. Expert Syst Appl. 2022;203: 117391.

Dai JQ, Yuan WH, Bao C, et al. DGNN: denoising graph neural network for session-based recommendation. In: Proceedings of IEEE 9th International Conference on Data Science and Advanced Analytics, 2022: 1–8.

Hua SS, Gan MX. Intention-aware denoising graph neural network for session-based recommendation. Appl Intell. 2023;53(20):23097–112.

Correia GM, Niculae V, Martins AFT. Adaptively sparse transformers. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, 2019: 2174–2184.

Yuan JH, Song ZH, Sun MY, et al. Dual sparse attention network for session-based recommendation. In: Proceedings of the 35th AAAI Conference on Artificial Intelligence, 2021: 4635–4643.

Wang JJ, Xie HR, Wang FL, et al. Jointly modeling intra-and inter-session dependencies with graph neural networks for session-based recommendations. Inf Process Manage. 2023;60(2): 103209.

Liu YY, Liu Q, Tian Y, et al. Concept-aware denoising graph neural network for micro-video recommendation. In: Proceedings of the 30th ACM International Conference on Information & Knowledge Management, 2021: 1099–1108.

Ding CX, Zhao ZY, Li C, et al. Session-based recommendation with hypergraph convolutional networks and sequential information embeddings. Expert Syst Appl. 2023;223: 119875.

Chen TW, Wong RCW. Handling information loss of graph neural networks for session-based recommendation. In: Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2020: 1172–1180.

Sarwar B, Karypis G, Konstan J, et al. Item-based collaborative filtering recommendation algorithms. In: Proceedings of the 10th International Conference on World Wide Web, 2001: 285–295.

Wang MR, Ren PJ, Mei L, et al. A collaborative session-based recommendation approach with parallel memory modules. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, 2019: 345–354.

Huang C, Chen JH, Xia LH, et al. Graph-enhanced multi-task learning of multi-level transition dynamics for session-based recommendation. Proc AAAI Conf Artif Intell. 2021;35(5):4123–30.

Xia X, Yin HZ, Yu JL, et al. Self-supervised hypergraph convolutional networks for session-based recommendation. Proc AAAI Conf Artif Intell. 2021;35(5):4503–11.

Hou YP, HU BB, Zhang ZQ, et al. CORE: simple and effective session-based recommendation within consistent representation space. In: Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2022: 1796–1801.

Zhang PY, Guo JY, Li CZ, et al. Efficiently leveraging multi-level user intent for session-based recommendation via atten-mixer network. In: Proceedings of the 16th ACM International Conference on Web Search and Data Mining. 2023: 168–176.

Qiao ST, Zhou W, Luo FJ, et al. Noise-reducing graph neural network with intent-target co-action for session-based recommendation. Inf Process Manage. 2023;60(6): 103517.

Funding

This paper is fully supported by the following funds: National Natural Science Foundation of China under Grant No.62166032; Natural Science Foundation of Qinghai Province under Grant No.2022-ZJ-961Q.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wu, B., Zhu, Y. Denoising dual sparse graph attention model for session-based recommendation. Discov Computing 28, 29 (2025). https://doi.org/10.1007/s10791-025-09532-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10791-025-09532-2